Life and business today are hugely reliant on the collection, processing, sharing and analysis of information. From the adverts we see, to the music we listen to and the way we do our jobs, data is a driving force in the background.

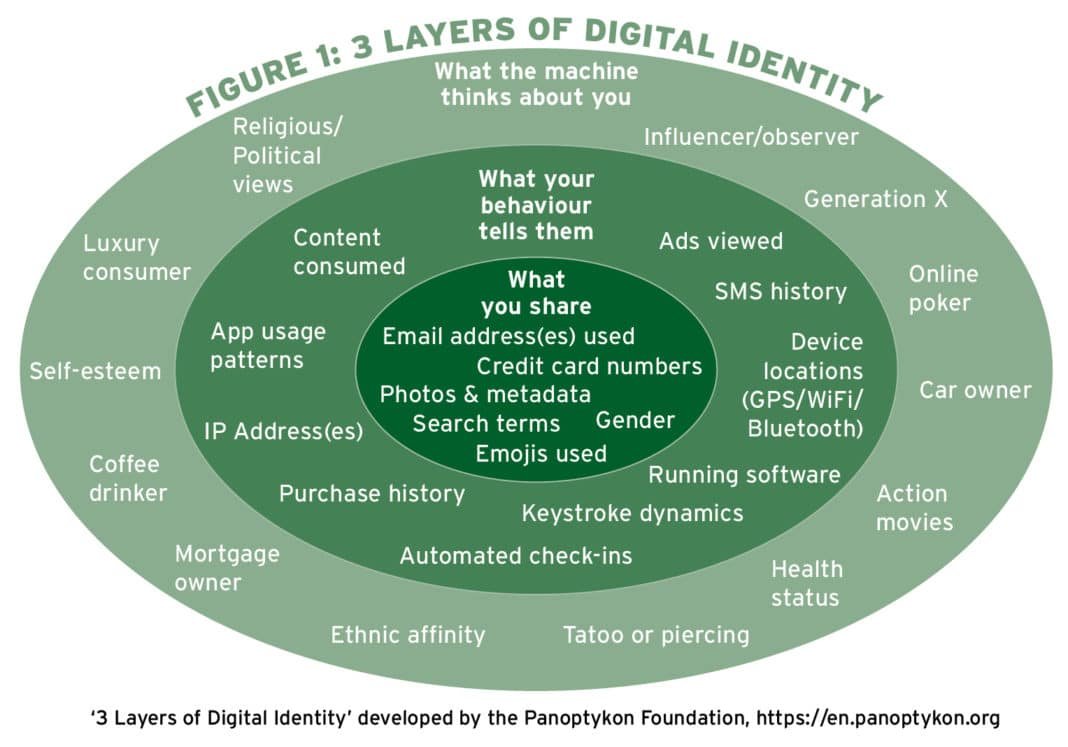

And, every day, people around the world generate and give away massive and unprecedented amounts of personal data, often without even realising it.

Data protection laws have been solidified and increasingly enforced in jurisdictions around the world, introducing privacy and data security as important global legal and business issues. The emergence of this new climate has brought concepts of fairness, integrity, honesty, transparency, accountability and trust into the spotlight (see Figure 1, below).

Trust and related terms are often used loosely, and public trust in governments, financial services institutions, the media and technology companies has dipped to a historic low. A Pew Research study from 2017 found that only 18 per cent of Americans say they can trust the government in Washington to do what is right ‘just about always’ (three per cent) or ‘most of the time’ (15 per cent).[1] In 2018, Gallup reported that 40 per cent of survey respondents had very little or no confidence in newspapers and only one-third had ‘a great deal’ or ‘quite a lot’ of confidence in banks.[2] These numbers are alarmingly low when compared to survey responses from the previous decade.

Broadly, consumer, client and shareholder trust in business has eroded. Yet, because trust is so vital to economic stability and growth, corporations are now under pressure to restore and maintain a foundation of accountability and reliability. Doing so requires a holistic approach that embeds ethics and transparency throughout the data lifecycle, from the moment data is collected to how it is used, shared and disposed of.

A fragile new currency

Our digital economy runs on data, and entire industries are built for the sole purpose of selling, enriching and trading it. Thus, trust has become a valuable, albeit delicate, currency in the system.

For years, many organisations have communicated to employees that they should have no expectation of privacy at work, or when using employer-provided devices. This type of policy is common, and similar thinking has long been applied to consumer privacy rights. At the core of this mindset is the presumption that data is owned solely by the institution and may be managed and monetised in any way the institution chooses. However, as data breaches have proliferated and individuals have increasingly challenged their service providers and employers to protect their data, the dynamic is shifting.

There have been many instances of businesses being publicly accused of discrepancies between their claims about consumer trust and their actual practices. Privacy advocates and consumer watchdogs are increasingly raising questions about various businesses and their activities, and are warning about the dangers of initiatives to assign and monitor consumer trust scores or social credit scores. Technology is further complicating these issues, with advanced data analytics and surveillance tools powered by artificial intelligence (AI) coming into widespread use. In response, the EU has published an early draft of guidelines for ‘trustworthy’ AI, which is defined as having principles that include: do good, do no harm, preserve human agency and operate transparently.

Ethics in data processing

Alongside the changing trust matrix, data ethics has emerged as a new branch of applied ethics that describes the judgements and approaches made when generating, analysing and disseminating information, including the application of data protection law and use of new technologies. The UK’s Department for Digital, Culture, Media & Sport has issued a framework for data ethics to guide the design of appropriate data use in government and the wider public sector.[3] It provides insight into government expectations and best practices for information management.

However, because data is so diverse and its application is highly context-specific, developing a universal code for data ethics is challenging. Data use in the medical field, in financial services and in criminal investigations will vary widely. This is why organisations must develop their own unique, internal data ethics framework, which can encompass compliance with regulatory obligations, such as the General Data Protection Regulation (GDPR), and a balance between the commercial value of their data and its ethical use.

Many organisations struggle with creating such a framework and are often stuck without clarity on where to begin. Common questions and considerations that should be evaluated at the outset of this effort include:

- Is the organisation aware of the full extent of the information that has been disclosed, and of how it is tracked, stored, documented and communicated to data subjects?

- Which entities control or own data that has been or is regularly shared or sold?

- How is/was consent obtained? Was the request for consent specific, informed, unambiguous and revocable at any time?

- Would the data subjects be surprised to learn that their data is being retained forever, or that it was collected in the first place?

- Are the uses of the data consistent with the intentions that were originally communicated to the data subject?

Information governance to enable trust

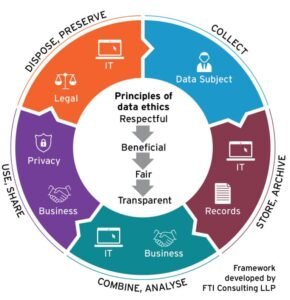

Compliance obligations have become increasingly demanding and, in addition to combatting distrust, many organisations are also battling compliance fatigue. Ethics and a company culture based on privacy can help manage unforeseen regulatory risks and support compliance needs at the same time. The principles of information governance (IG) can help to align legal, privacy, business and user requirements, clarify stakeholder obligations and proactively identify potential compliance gaps (see graphic below).

Tried and true IG principles and considerations should be examined together with data ethics principles, including:

Respectful: Data processing and use should always align with the original purpose that was communicated to the data subject. When GDPR came into effect in 2018, individuals experienced a tsunami of emails and consent popups, which, ironically, resulted in many ignoring notices that were intended to secure consent and/or promote transparency. Organisations must ensure that the context of the original basis for data processing (e.g. consent) is respected and have a methodology in place that makes it possible to maintain and track these activities.

Beneficial: A tension exists between protecting individuals and enabling innovation. Where data processing activities have the potential to impact individuals, the benefits and potential risks of the data processing activity should be defined, identified and assessed. This may be incorporated as part of conducting a data protection impact assessment or an ethical data impact assessment.

Fair: Avoid actions that seem inappropriate or might be considered offensive or cause distress. The accuracy and relevancy of data should be regularly reviewed to reduce errors and uncertainty and algorithms should be evaluated for bias or discrimination.

Transparent: Corporations need to be accountable for the types of processing activities being done so that expectations of the individuals to whom the data relate and/or the individuals who are impacted by the data use are considered. Also, visibility into a business’s data processing activities is only valuable if the audience can understand what is being shared. This means that organisations have a responsibility to explain in an honest, accurate and digestible way how their AI, data algorithms or anonymisation/pseudonymisation tools work. Decisions made about an individual and the decision-making process should be explainable and reasonable.
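One way to picture the tracking methodology mentioned under the Respectful principle is a simple record of what each data subject agreed to, checked before any new use of their data. The sketch below is illustrative only: the `ConsentRecord` class, its fields and the purpose names are hypothetical and are not drawn from GDPR or from any particular product.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import FrozenSet, Optional


@dataclass
class ConsentRecord:
    """Hypothetical record of the purposes communicated to one data subject."""
    subject_id: str
    purposes: FrozenSet[str]          # purposes stated when consent was requested
    granted_at: datetime
    revoked_at: Optional[datetime] = None

    def permits(self, purpose: str, at: datetime) -> bool:
        """Return True only if `purpose` was communicated and consent is active at `at`."""
        if at < self.granted_at:
            return False
        if self.revoked_at is not None and at >= self.revoked_at:
            return False
        return purpose in self.purposes


if __name__ == "__main__":
    record = ConsentRecord(
        subject_id="subject-001",
        purposes=frozenset({"order_fulfilment", "newsletter"}),
        granted_at=datetime(2018, 5, 25, tzinfo=timezone.utc),
    )
    check_time = datetime(2019, 1, 1, tzinfo=timezone.utc)
    print(record.permits("newsletter", check_time))    # True: purpose was communicated
    print(record.permits("ad_profiling", check_time))  # False: never communicated
```

A check of this kind only helps if the recorded purposes match what was actually shown to the individual, so the record is best created at the same moment the consent request is presented.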

Some groups have begun to explore the possibility of a universal ‘Hippocratic Oath’ for data use. Bloomberg has teamed up with BrightHive and Data for Democracy to tackle this, launching the Community Principles on Ethical Data Sharing (CPEDS), which provides a set of guidelines on responsible data sharing and a code of ethics for data scientists. It is important for businesses to stay abreast of these types of developments and explore how involvement in or adoption of proposed principles may work for their business.

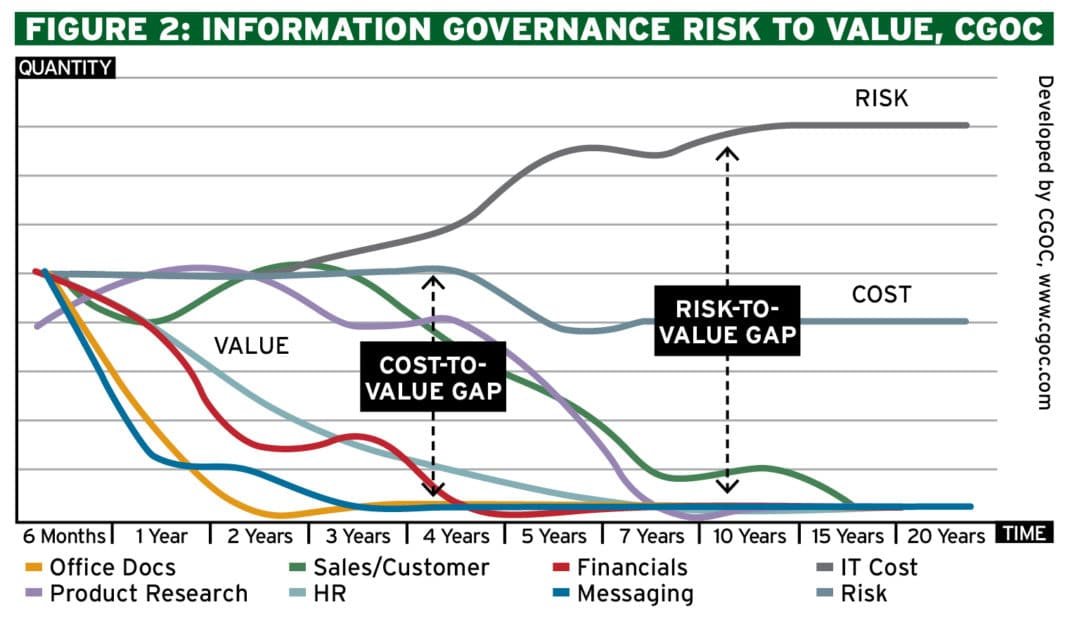

Before the advent of data protection laws, most organisations took the approach of gathering as much data as possible and saving everything, just in case it was needed. This is no longer acceptable. GDPR forces corporations to think about the purpose and intent of data collection in the first place. Does it really bring value, or is it being collected just because it always has been? Is the organisation storing certain information because ‘data is the new oil’? It is important to weigh the value of the data against the liability associated with unlawful processing and the risk to the individual. Not all data is of equal value and data depreciates over time. Therefore, disposal and remediation should be viewed as methods to reduce cyber risk, secure sensitive data and improve the value of analytics (see Figure 2).
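The idea that data depreciates and should be disposed of once it no longer serves a purpose can be expressed as a retention schedule. The following is a minimal sketch under stated assumptions: the categories, the retention periods and the `flag_for_review` function are hypothetical, and real retention periods depend on an organisation's legal and business requirements.

```python
from datetime import date, timedelta

# Hypothetical retention schedule: category -> how long the data may be kept.
RETENTION = {
    "marketing_leads": timedelta(days=365),
    "invoices": timedelta(days=7 * 365),
}


def flag_for_review(records, today):
    """Return records that are past their retention period or have no documented category."""
    flagged = []
    for record in records:
        period = RETENTION.get(record["category"])
        # No documented retention period suggests no documented purpose either.
        if period is None or record["collected"] + period < today:
            flagged.append(record)
    return flagged


if __name__ == "__main__":
    inventory = [
        {"id": 1, "category": "marketing_leads", "collected": date(2018, 1, 1)},
        {"id": 2, "category": "invoices", "collected": date(2018, 1, 1)},
        {"id": 3, "category": "legacy_export", "collected": date(2015, 3, 1)},
    ]
    for record in flag_for_review(inventory, today=date(2019, 6, 1)):
        print(record["id"], record["category"])
```

Flagged records are candidates for review rather than automatic deletion, since some data may be subject to legal holds or other obligations.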

The heart of IG is to bring together a robust framework that connects stakeholders and information. The relationship between them is always evolving and, as such, should be periodically reviewed and benchmarked to keep up with rapidly changing technology and processes. Governance practices need to be holistic and revisited regularly and should be managed by collaboration across IT, legal, privacy, data teams and the C-suite, to ensure ongoing transparent practices for ethical governance.

Understanding the true value of data

Data as an asset is not a new concept, but recent research shows that information is more valuable than ever before and corporations are now looking at the business value of data in new ways. The European Commission has forecast that, by 2020, personalised data will be worth €1 trillion, almost eight per cent of the EU’s GDP. The World Economic Forum reported that the ‘global data economy is pegged at $3 trillion’.[4] Other estimates have valued data at anywhere between 15 and 60 per cent of a company’s total worth.

This raises the question: what are we losing by not governing our data? Poor data management can undermine value in many ways. This can manifest in project delays and in high storage, legal review and technology costs relating to excessive data volumes. Sanctions and reputational damage resulting from data breaches and privacy violations are additional risks that can result from a lack of holistic IG.

When making a business case for stronger privacy, teams should communicate to company leadership the ways in which better data management can add value, as well as the ways in which a lack of compliance can damage the bottom line. Data-driven business models have created up to 35 per cent of adjusted gross revenue for companies that execute well. Conversely, Forrester Research estimates that data breaches will reach a consolidated cost of $2.1 trillion, or 2.2 per cent of the global GDP, this year. In 2018, one technology giant lost $120 billion in market value following data privacy scandals. Data protection authorities in Europe have begun to issue fines and penalties for GDPR non-compliance and enforcement is expected to ramp up steadily in the coming months and years.

The business case for blockchain

Over the last couple of years, the viability and range of blockchain applications have become clear and the technology has evolved from a theory to a usable, practical solution for many enterprise problems. Simply put, blockchain is a distributed peer-to-peer digital ledger in which transactions are recorded chronologically and publicly. In certain cases, a private blockchain works in the same way, but is visible only to approved parties and users. It provides a way to account for and openly track transactions between approved parties and, because it is decentralised and designed for versatility, it is typically secure and difficult to alter or compromise. Initially implemented as the technology behind cryptocurrency and introduced as a disruptor to financial markets, blockchain is now finding uses across a wide range of industries.
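To make the 'difficult to alter' property more concrete, the sketch below shows a toy hash-chained ledger in which each entry stores the hash of the one before it, so changing an earlier transaction breaks every later link. It is a simplified illustration, not a real blockchain: it runs on a single machine and omits the distribution, consensus and access control that production systems rely on. The function names and the transaction fields are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, transaction, previous_hash):
    """Hash a block's contents together with the hash of the block before it."""
    payload = json.dumps(
        {"index": index, "transaction": transaction, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, transaction):
    """Record a transaction at the end of the chain, in chronological order."""
    index = len(chain)
    previous_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({
        "index": index,
        "transaction": transaction,
        "previous_hash": previous_hash,
        "hash": block_hash(index, transaction, previous_hash),
    })


def is_valid(chain):
    """Check that every block still matches its hash and links to its predecessor."""
    expected_previous = GENESIS_HASH
    for index, block in enumerate(chain):
        if block["index"] != index or block["previous_hash"] != expected_previous:
            return False
        recomputed = block_hash(block["index"], block["transaction"], block["previous_hash"])
        if block["hash"] != recomputed:
            return False
        expected_previous = block["hash"]
    return True


if __name__ == "__main__":
    ledger = []
    append_block(ledger, {"from": "party_a", "to": "party_b", "amount": 10})
    append_block(ledger, {"from": "party_b", "to": "party_c", "amount": 4})
    print(is_valid(ledger))   # True: untouched ledger verifies

    ledger[0]["transaction"]["amount"] = 1000  # tamper with an earlier entry
    print(is_valid(ledger))   # False: the stored hash no longer matches
```

In a distributed setting, many participants hold copies of the ledger and run a check like `is_valid`, which is what makes a quiet alteration by any single party hard to get away with.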

Trust, security and transparency are arguably the most significant benefits blockchain provides. Reaping these benefits depends on implementing blockchain solutions through careful planning and with an organisation’s unique workflows, privacy, regulatory and security needs in mind. Like the introduction of any new technology or system, blockchain use must be vetted across key stakeholders within the organisation, to ensure it operates within the existing IG infrastructure. This will avoid compliance and privacy pitfalls and help blockchain solutions to enable and nurture trust.

The tide of progress will continue to swell forward, regardless of whether organisations try to resist or ride along with it. Technology advancement and the innovative use of data are not the enemy, but they must be managed and addressed in order to maintain a healthy ecosystem. Organisations must engage in transparent, multi-faceted and open dialogue about how to use data ethically. This will help future-proof their business models and ensure they can both enable innovation and act responsibly.